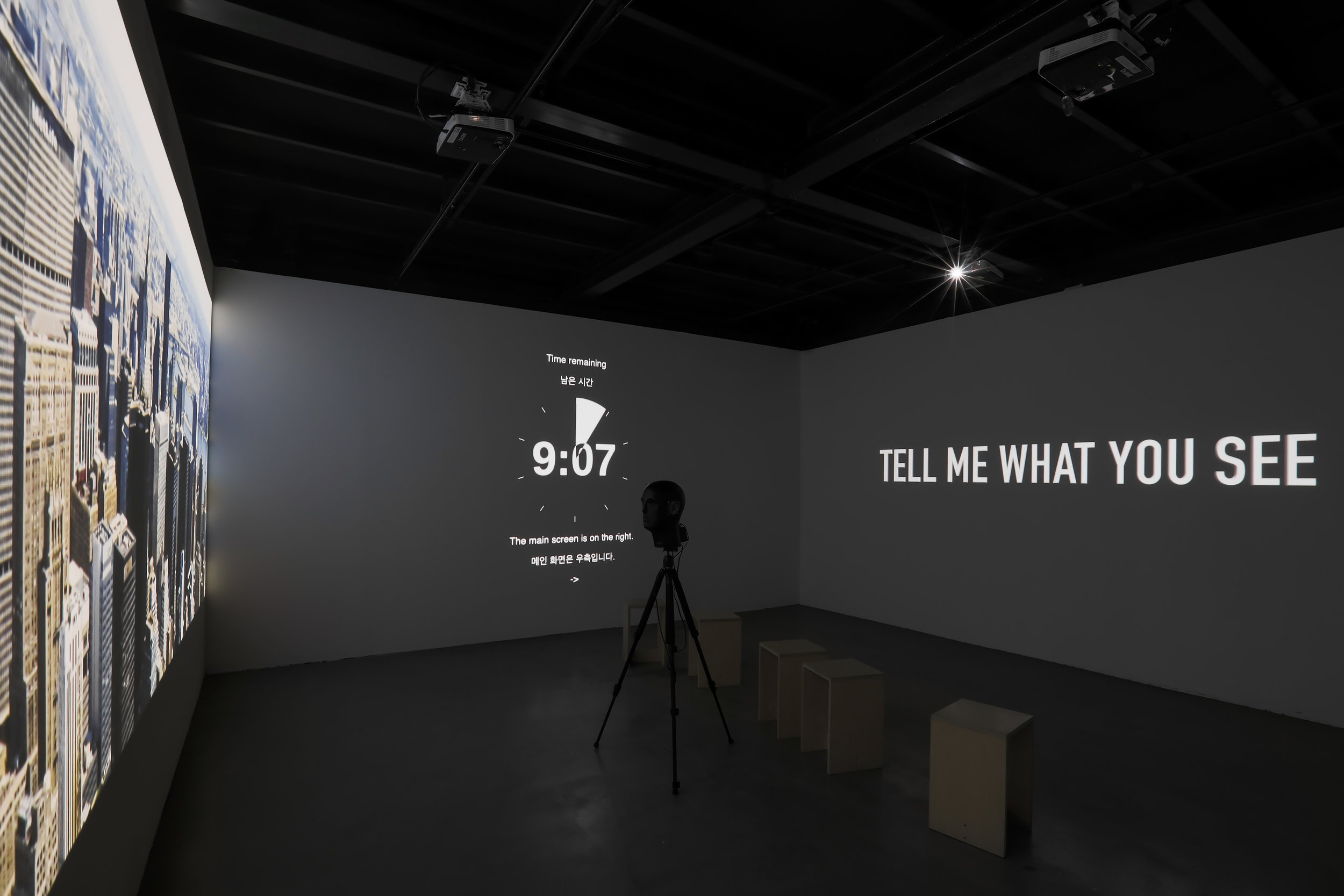

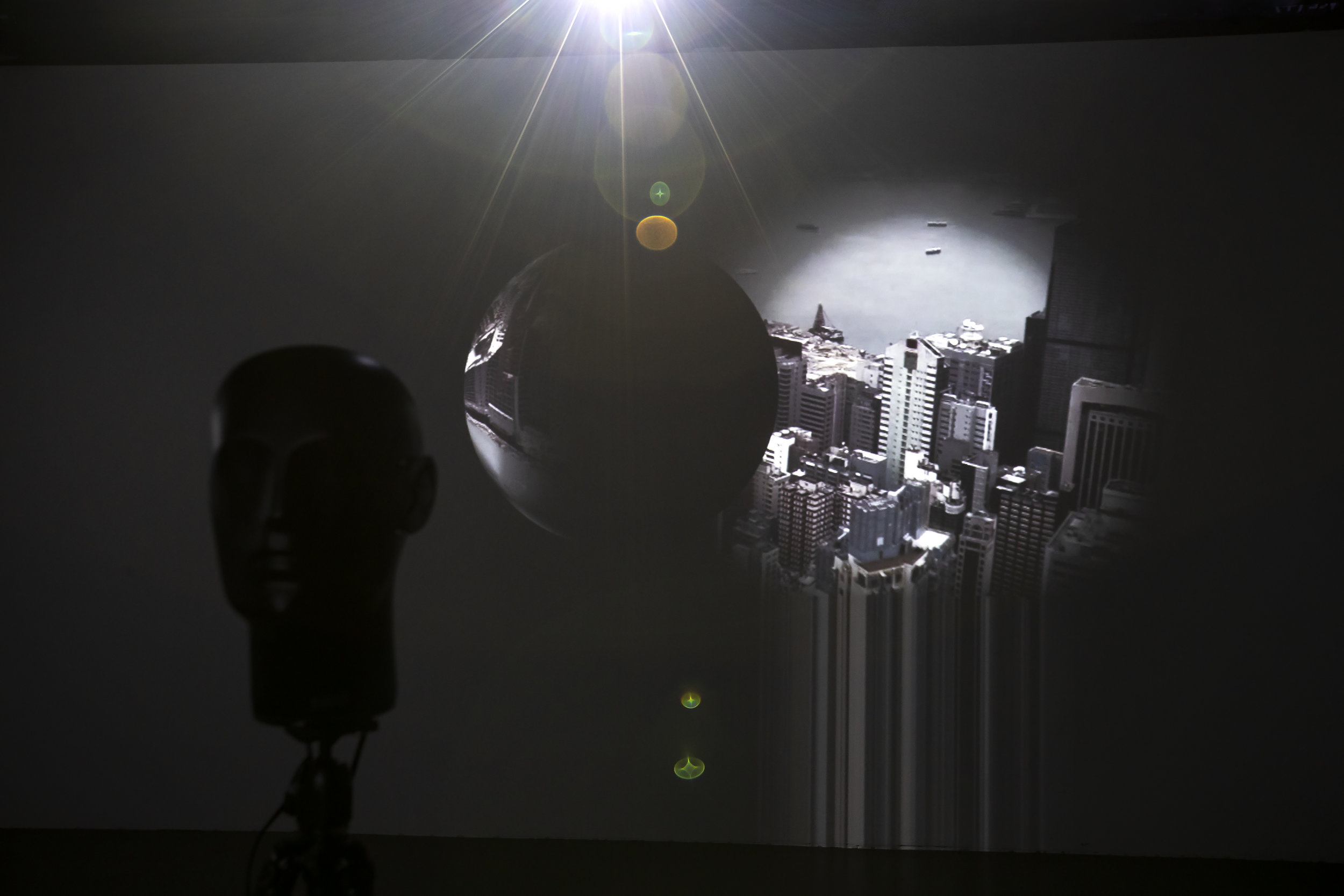

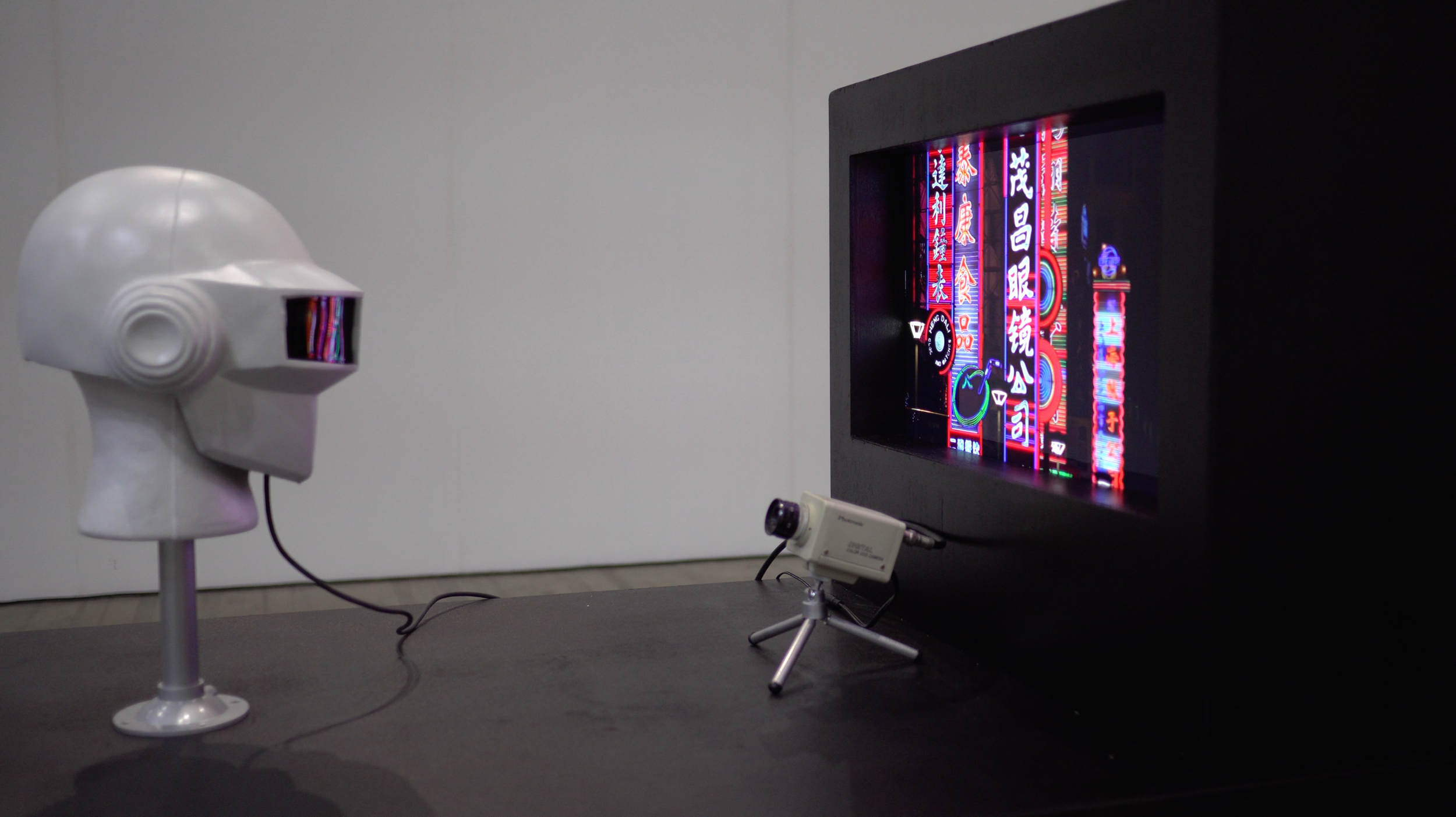

TELL ME WHAT YOU SEE, 2018

VIDEO INSTALLATION

3CH VIDEO, 4CH SOUND, DUMMY HEAD

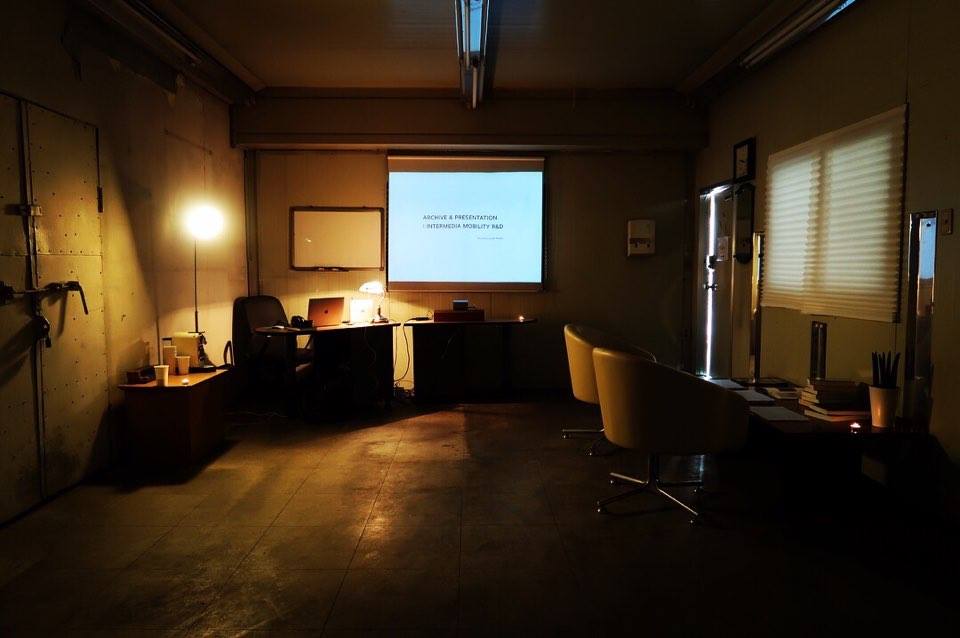

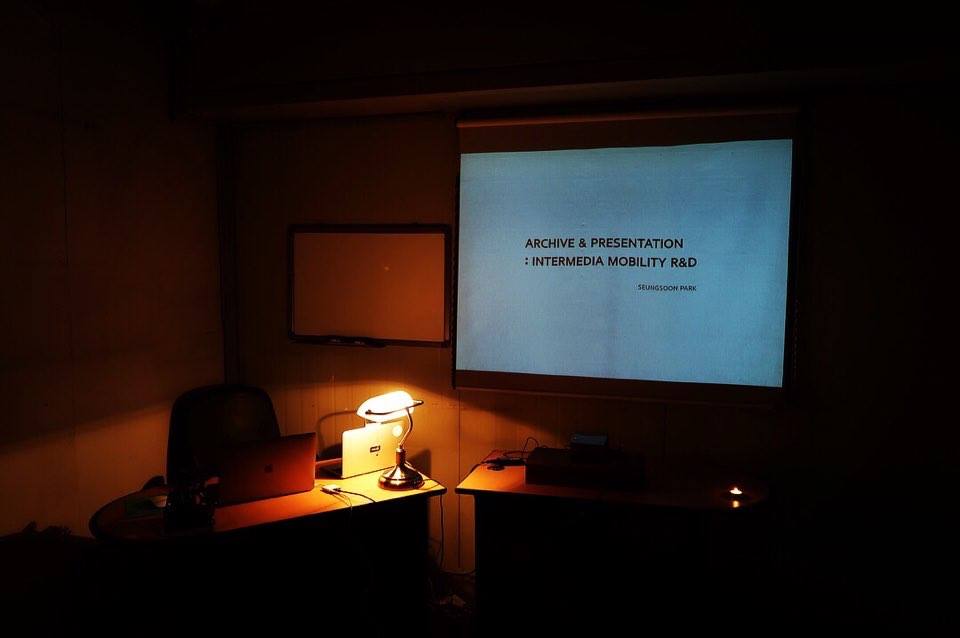

@ACC_R Creators in Lab Showcase, Asia Culture Center, Gwangju, Korea | 2018. 12. 14. - 12. 23.

@2019 Random Access Vol. 4 <NEUROSPACE>, Nam June Paik Art Center, Yongin, Korea | 2019. 7. 18. - 9. 22.

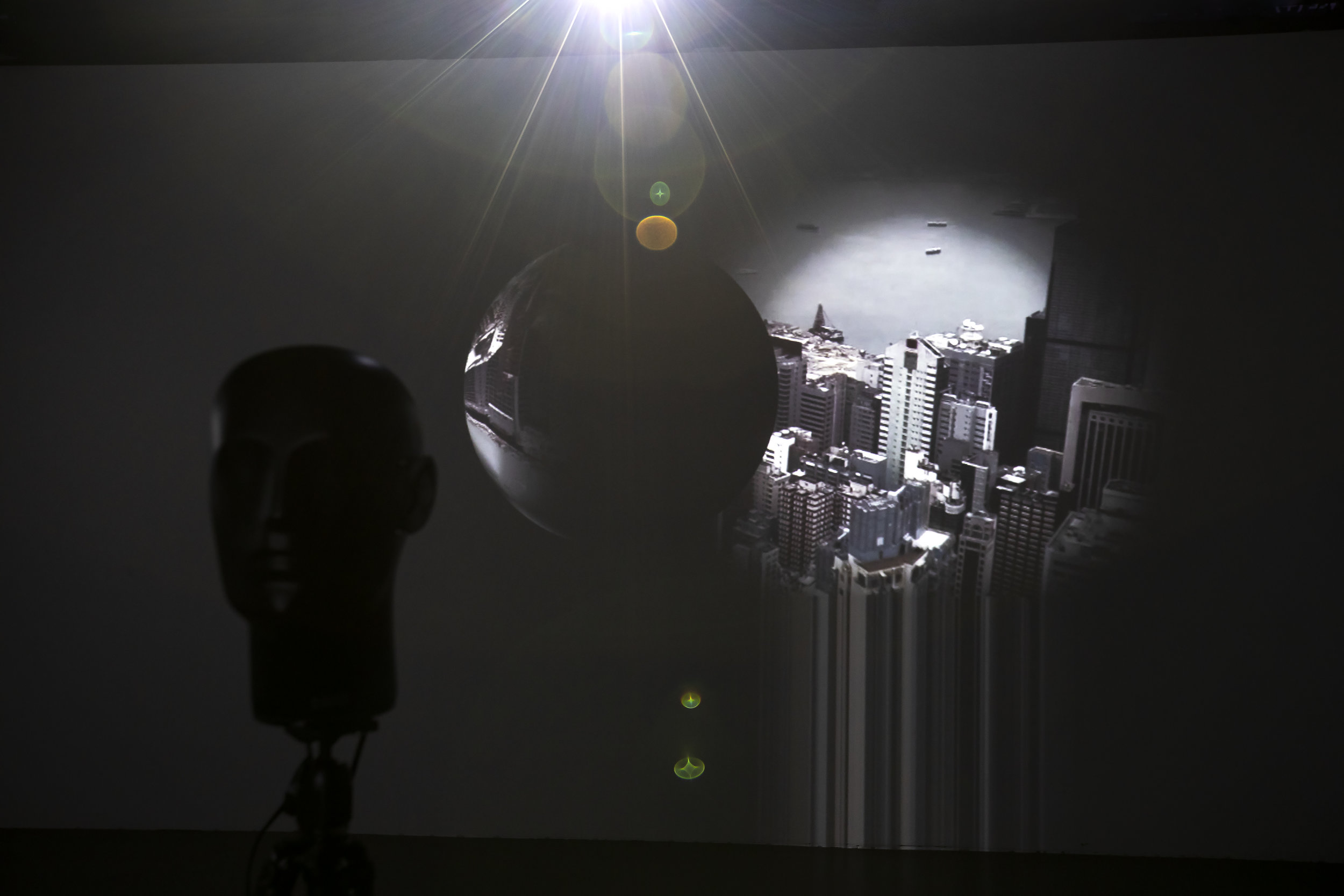

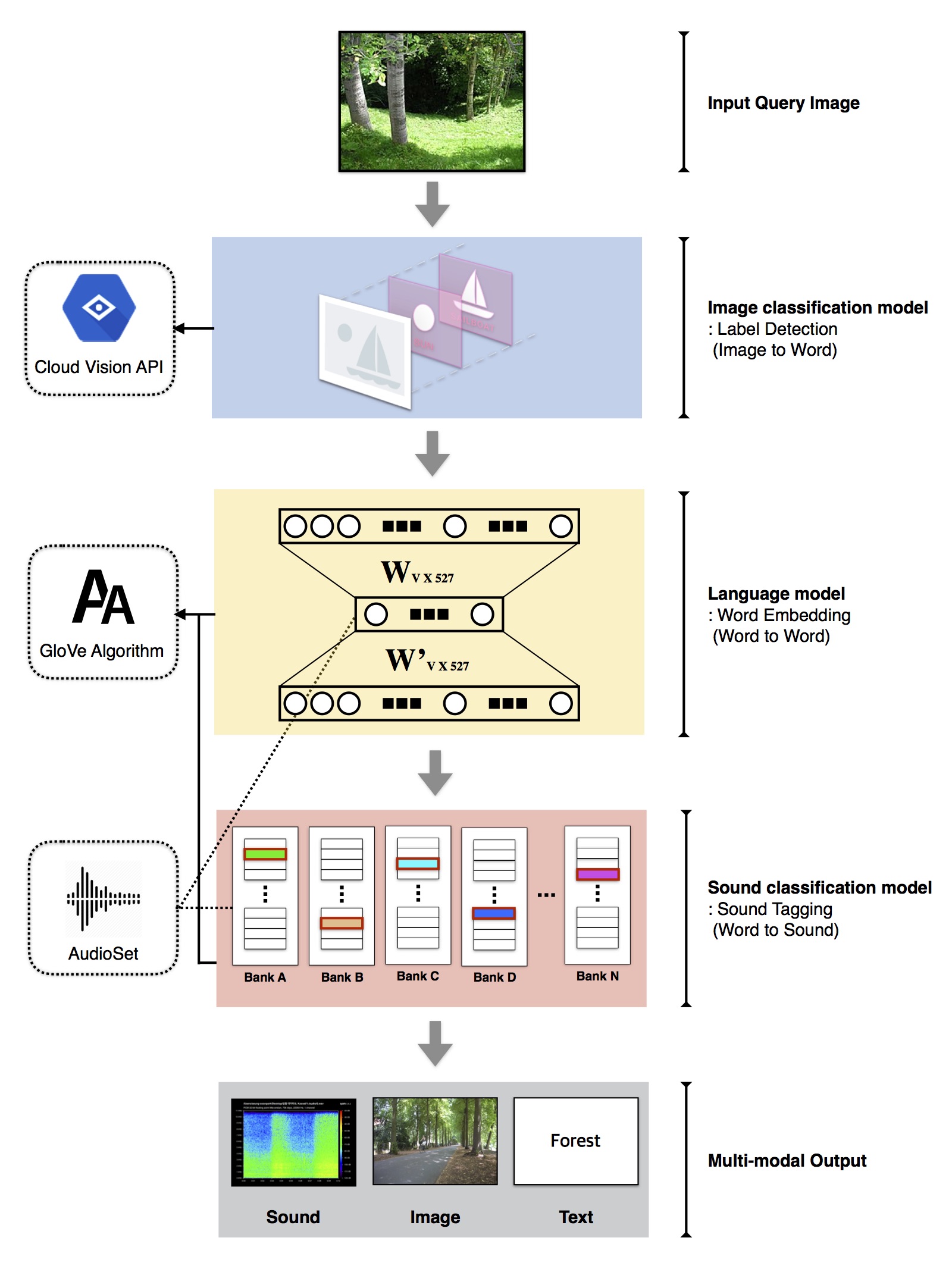

<Tell Me What You See> is an audio-vision artwork structured as an "auditory game": the audience reads sentences and listens to artificial soundscapes produced with the NEUROSCAPE AI system, then compares the image they imagined with the original image. NEUROSCAPE is an artificial intelligence soundscape system developed in 2017 by Seungsoon Park, a composer and cross-media artist, and Jongpil Lee, an algorithm developer. It automatically generates and maps environmental sounds that correspond to images of nature or cities.

This work reverses the sequence of the existing NEUROSCAPE process of "image analysis, word embedding, audio tagging and mapping." The audience first views the sentences and labels detected by the computer vision API, then hears the sound mapped by NEUROSCAPE, and finally sees the original image that the machine analyzed. To express this structure more dramatically, the artist selected target images based on several global issues. Technology has benefited our lives by driving industrialization and urbanization, yet human errors such as inequality of wealth, moral defects, and environmental destruction and pollution have damaged the natural environment. In other words, human beings are harmed by other human beings. In particular, the sight of iceberg fragments melting and drifting away due to global warming reminded the artist of refugees swept out to sea.

These narratives point to the limitations that artificial intelligence still has in addressing our various social issues. An image can be inferred from text and sounds such as "a large green field with clouds in the sky," "a person in a cage," "a group of people walking in the rain," "a harbor filled with boats," and "a boy lying on the beach."

What image do you think of?

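<Tell Me What You See> is an audio-vision artwork that media musician Seungsoon Park created using NEUROSCAPE, an artificial intelligence soundscape system. NEUROSCAPE was developed in 2017 by Seungsoon Park and Jongpil Lee (algorithm developer), and it works by automatically generating and mapping sounds that suit images of cities or nature.

This work presents the existing NEUROSCAPE process of "image analysis, word embedding, audio tagging/mapping" in reverse order. The audience first sees the sentences produced by the computer vision API, then listens to the artificial soundscape detected by the NEUROSCAPE system. Finally, the target image analyzed by the computer is shown, so that the audience can compare the image and sound they imagined from the sentence, the scene (image) they imagined from the artificial sound, and the actual image.

To express this structure more dramatically, the target images for analysis were selected around several themes. Technology has driven human life, industrialization, and urbanization and has benefited us, but mistakes that humans keep making, such as inequality of wealth, moral defects, and environmental destruction and pollution, have destroyed and polluted the natural environment, and humans in turn suffer harm through natural disasters. In particular, the sight of iceberg fragments melting and drifting away due to warming brought to mind refugees swept out to sea.

Through this narrative, the work raises the problem that artificial intelligence technology still has limitations in dealing with our many social issues.

"A large green field with clouds in the sky," "a person in a cage," "a group of people walking in the rain," "a harbor filled with boats," "a boy lying on the beach."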